Apple's Silent Shift to Real On-Device AI

The A19 Pro is the first iPhone chip that behaves like a scaled-down AI workstation. Neural accelerators inside every GPU core, a new wireless stack, a faster modem and a bigger memory system all push the iPhone toward true on-device intelligence with far less dependence on the cloud. This generation isn’t about adding AI tricks; it’s about the phone itself becoming the processor for everything you do. At the other end of the lineup, the M5 takes the same architecture and blows it up into a full workstation chip for Macs. Together they define Apple’s new direction: fast, private, local AI across the entire ecosystem.

Every year, Apple relaunches the same pizza with a different topping: a faster chip, a better camera, more customisation, more magic. And every year people buy into the craze and move on. But this time, under the glossy marketing, Apple has moved the iPhone into a completely new class: a device that thinks, adapts and responds on its own. While Google and OpenAI are fighting data-centre wars, Apple has quietly made the iPhone far less dependent on the cloud for intelligence.

What’s actually happening under the hood?

NVIDIA's H100 isn't fast because of its gigantic size; it's fast because of its Tensor Cores, which are designed to do one thing insanely well: multiplying huge blocks of numbers (matrices) in parallel. Every LLM, every diffusion model, every flashy AI online relies on those Tensor Cores running trillions of operations per second. They accelerate matrix maths the same way a Bugatti goes 0-200: by dumping everything into raw parallel compute.
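To make "multiplying huge blocks of numbers in parallel" concrete, here is a minimal Python sketch of a tiled matrix multiply. The output is split into small square blocks, and each block can be computed independently of the others. Matrix engines such as Tensor Cores are built to run many copies of exactly that kind of work at once. The tile size `TILE` and the function name `matmul_tiled` are hypothetical choices for illustration. Real hardware uses fixed tile shapes that vary by architecture, and this pure-Python loop shows the shape of the work, not its speed.

```python
# Hypothetical tile size for illustration; real matrix engines use fixed
# tile shapes that differ between architectures.
TILE = 4


def matmul_tiled(a, b):
    """Multiply a (n x k) by b (k x m), both given as lists of lists,
    one TILE x TILE block of the output at a time."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    c = [[0.0] * m for _ in range(n)]
    # Each (i0, j0) output block is independent of the others, which is
    # what lets hardware compute many blocks in parallel.
    for i0 in range(0, n, TILE):
        for j0 in range(0, m, TILE):
            # Accumulate the block's result one TILE-wide slice of k at a time.
            for p0 in range(0, k, TILE):
                for i in range(i0, min(i0 + TILE, n)):
                    for j in range(j0, min(j0 + TILE, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + TILE, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


print(matmul_tiled([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19.0, 22.0], [43.0, 50.0]]
```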

The A19 introduces something almost unbelievable: Neural Accelerators embedded directly into every single GPU core. Apple took these matrix engines and shrank them into a thermal envelope that fits inside a 5.6 mm-thick phone. Instead of leaving AI to a side module on the chip (the separate Neural Engine is still there), it fuses neural hardware straight into the GPU pipeline. Boom! Graphics and ML now share the same space. The phone no longer has to pick between running a game or a model; it can handle both in real time because the underlying hardware no longer treats them as separate workloads.
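Why fuse ML hardware into the GPU at all? Both workloads lean on the same primitive. The minimal sketch below uses hypothetical helper names (`matvec`, `dense_relu`). It first moves a 3D point with a 4x4 transform matrix, a graphics task. It then runs one dense neural-network layer, an ML task. Both reduce to multiply-and-add over a matrix. This illustrates the idea only and says nothing about how Apple's Neural Accelerators are actually programmed.

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v: one dot product per row."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


# Graphics: translate a 3D point by (2, 3, 4) using a 4x4 homogeneous matrix.
translate = [
    [1, 0, 0, 2],
    [0, 1, 0, 3],
    [0, 0, 1, 4],
    [0, 0, 0, 1],
]
moved = matvec(translate, [1, 1, 1, 1])  # [3, 4, 5, 1]


def dense_relu(weights, bias, x):
    """ML: one dense layer, y = relu(W @ x + b)."""
    return [max(0.0, y + b) for y, b in zip(matvec(weights, x), bias)]


hidden = dense_relu([[0.5, -1.0], [2.0, 1.0]], [0.0, -1.0], [1.0, 2.0])  # [0.0, 3.0]

print(moved, hidden)
```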

Dynamic Caching

One of the most underrated parts of this generation is caching. The A19 family still uses a 6-core CPU, but the Pro variant gets much larger caches, enough that its performance cores behave like they belong to a different class. The system-level cache jumps to 32 MB, which is partly why the Pro model can sustain high AI throughput without cooking itself. On top of that, the A19 Pro’s GPU uses Dynamic Caching to reallocate on-chip memory in real time depending on the workload: ray tracing, 3D rendering, multi-threaded compute or ML inference.
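Apple has described Dynamic Caching as allocating the GPU's local memory in hardware, in real time, so that each task uses only what it actually needs rather than a fixed worst-case reservation. The toy model below shows why that matters. It uses a hypothetical memory pool and a hypothetical per-phase memory profile. If a shader needs a lot of memory only briefly, reserving its peak for its whole lifetime caps how many thread groups can run at once. The model assumes groups run staggered through their phases. It illustrates the principle and is not a model of Apple's actual hardware.

```python
# All numbers are hypothetical and chosen only to illustrate the principle.
POOL_KB = 256                 # on-chip memory available to one GPU core
PHASES_KB = [16, 16, 64, 16]  # one thread group's memory need per phase


def max_groups_static(phases, pool):
    """Static allocation: every group reserves its peak need for its whole life."""
    return pool // max(phases)


def fits_dynamic(n, phases, pool):
    """Dynamic allocation: each group holds only its current phase's need.
    Group g runs g steps ahead of group 0; check the total at every step."""
    length = len(phases)
    return all(
        sum(phases[(t + g) % length] for g in range(n)) <= pool
        for t in range(length)
    )


def max_groups_dynamic(phases, pool):
    """Largest number of staggered groups that never overflow the pool."""
    n = 0
    while fits_dynamic(n + 1, phases, pool):
        n += 1
    return n


print(max_groups_static(PHASES_KB, POOL_KB))   # 4
print(max_groups_dynamic(PHASES_KB, POOL_KB))  # 8
```

With these made-up numbers, sizing for actual rather than peak use doubles the number of thread groups in flight. That is the kind of utilisation gain Dynamic Caching is aimed at.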

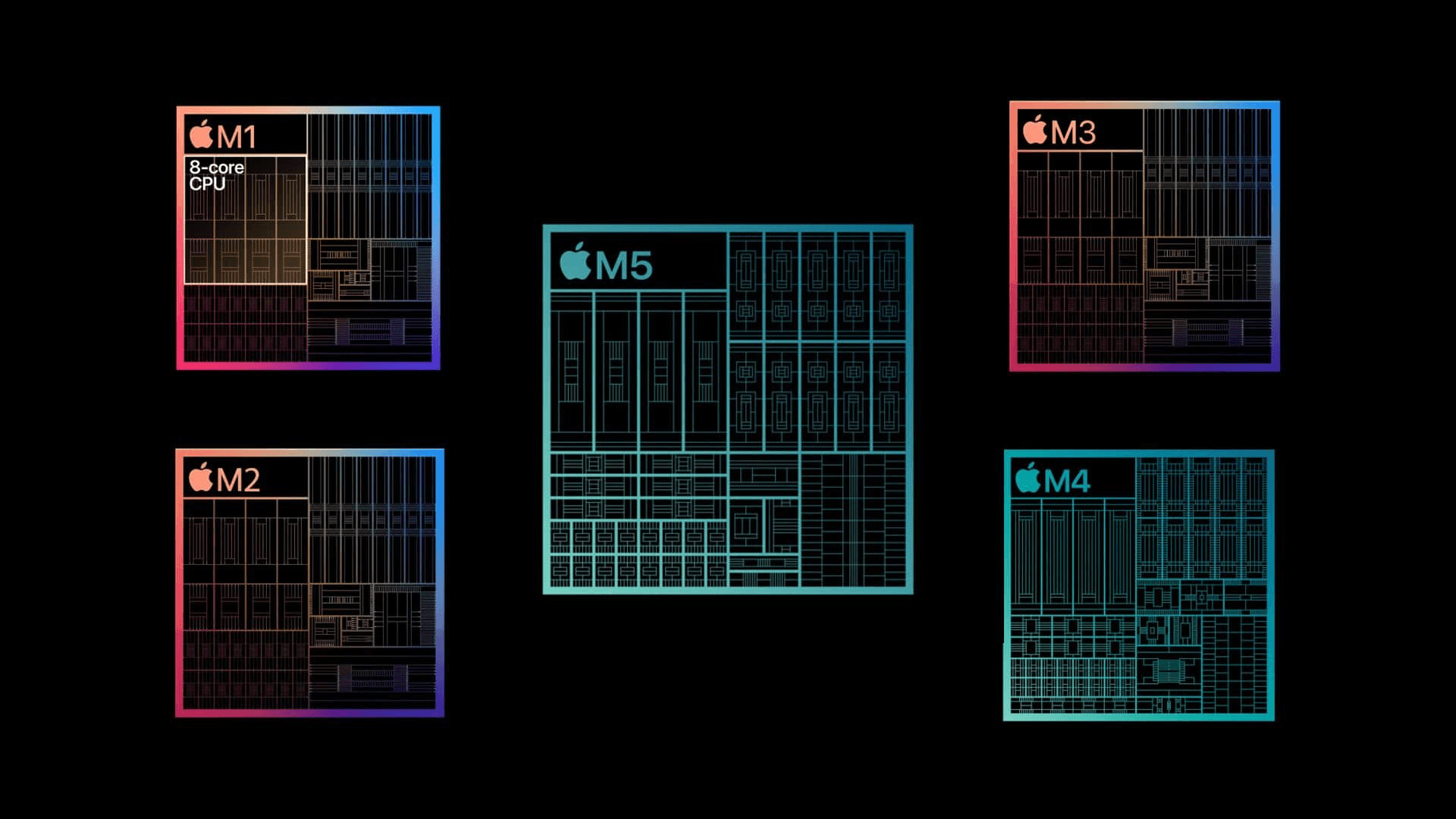

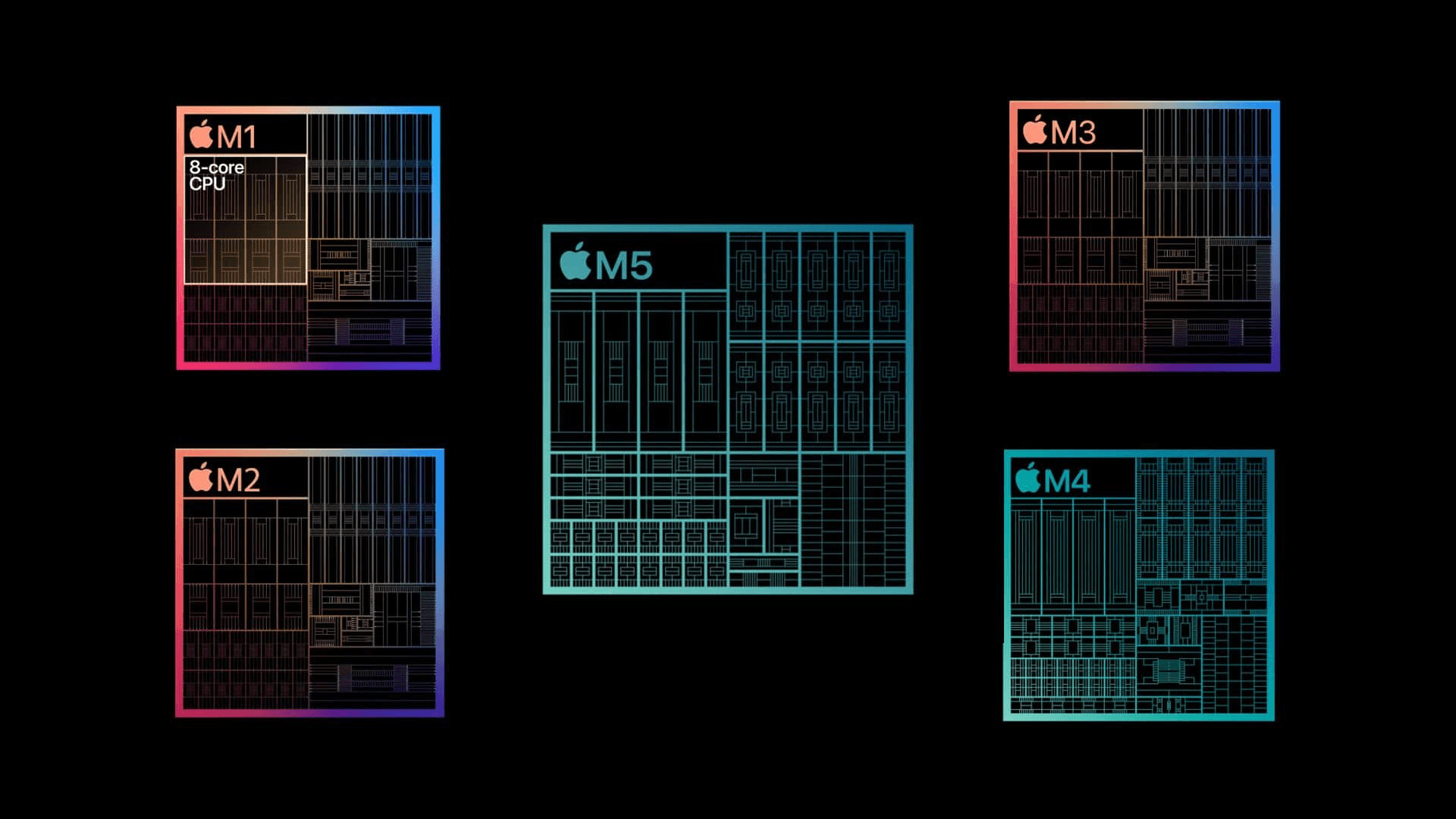

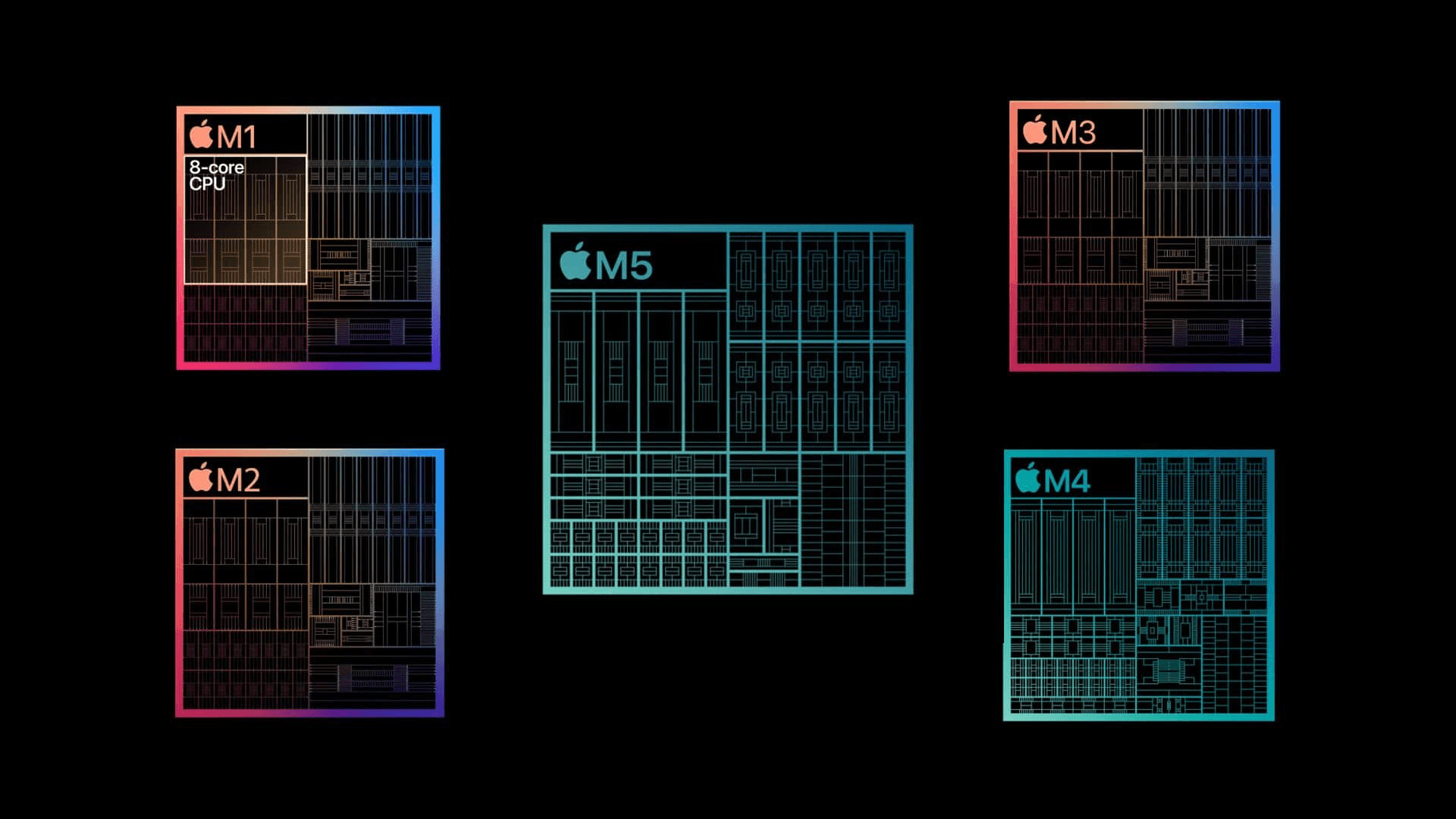

The M5 Bloodline

Now imagine everything the A19 is doing, then scale it up. That's M5.

It packs up to a 10-core CPU and a 10-core GPU, with wider buses, more aggressive Dynamic Caching and far higher sustained wattage. It’s roughly 10-12% faster in single-core, 15% faster in multi-core and 5% faster in GPU throughput than the already ridiculous M4.
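Uplift figures are easy to misread. "X% faster" describes throughput, so the time you actually save is smaller than X. The Python sketch below converts the rough M5-over-M4 numbers above into time saved. It assumes a job bound entirely by that one resource, whereas real workloads mix CPU, GPU and memory. The 11% single-core value is simply the midpoint of the 10-12% range.

```python
def time_fraction(uplift):
    """Fraction of the original run time left after a throughput uplift.
    A 15% uplift means 1.15x the work per second, so time scales by 1 / 1.15."""
    return 1.0 / (1.0 + uplift)


# Rough M5-over-M4 uplifts quoted above (single-core uses the 10-12% midpoint).
uplifts = {"single-core": 0.11, "multi-core": 0.15, "GPU": 0.05}

for name, u in uplifts.items():
    saved = 1.0 - time_fraction(u)
    print(f"{name}: {u:.0%} faster means about {saved:.1%} less time")
```

For example, the 15% multi-core uplift works out to roughly 13% less wall-clock time (1 / 1.15 ≈ 0.87).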

Hands-on M5 testing: Max Weinbach

For a full walkthrough of the numbers: iPhone 17 series Performance Review

If you'd rather read on the go: here's a Reddit post

N1 Wireless Chip + C1X Modem

Even the network stack is moving forward with the new N1 wireless chip and the C1X modem, although Apple still refuses to give mmWave another chance. The N1 is interesting because it shifts how the phone understands its environment. Apple is now using Wi-Fi access points as a core part of location awareness. That means your phone can position itself without firing up GPS every few seconds, which is great for battery life. It’s also the first time Apple has fully replaced the third-party wireless chip with something custom-tuned for its own power-management and latency targets.
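Positioning from Wi-Fi generally works by looking up nearby access points whose locations are already known, then estimating where you are from their signal strengths. Apple hasn't published how the N1 or iOS does this. The Python sketch below therefore uses a common textbook approach, the signal-weighted centroid, with hypothetical AP coordinates and readings. The `AccessPoint` type and `weighted_centroid` function are illustrative names, not an Apple API.

```python
from dataclasses import dataclass


@dataclass
class AccessPoint:
    """A visible Wi-Fi AP whose position is already known (hypothetical data)."""
    x: float         # metres east on a local grid
    y: float         # metres north on a local grid
    rssi_dbm: float  # received signal strength; closer to 0 means stronger


def weighted_centroid(aps):
    """Estimate position as the average of AP positions, weighted by signal power.
    dBm is converted to linear milliwatts so stronger APs dominate."""
    if not aps:
        raise ValueError("need at least one access point")
    weights = [10 ** (ap.rssi_dbm / 10) for ap in aps]
    total = sum(weights)
    x = sum(w * ap.x for w, ap in zip(weights, aps)) / total
    y = sum(w * ap.y for w, ap in zip(weights, aps)) / total
    return x, y


seen = [
    AccessPoint(0, 0, -40),  # strongest signal: probably closest
    AccessPoint(10, 0, -60),
    AccessPoint(0, 10, -60),
]
print(weighted_centroid(seen))  # lands close to (0, 0)
```

Production systems layer much more on top of this, such as propagation models, fingerprint databases and sensor fusion. The point is only that a phone can get a rough fix from APs it already sees, without powering up GPS.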

Paired with the C1X modem, the phone holds signal better, transitions between bands faster, and stays cooler during heavy network use. Yes, mmWave is gone, but honestly, mmWave has always been more marketing than reality. Apple is simply doubling down on reliable, efficient mid-band 5G and Wi-Fi 7 instead of chasing theoretical gigabit peaks nobody ever hits. The result is boring on paper, but noticeably smoother in daily use.
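"Transitions between bands faster" hides a trade-off: a modem should switch quickly, but without ping-ponging between two cells whose signals are close. The Python sketch below shows the usual guard. It hands over only when the neighbour beats the serving signal by a margin for several consecutive measurements. It is loosely modelled on the A3 measurement event in 3GPP, where a neighbour becomes offset-better than the serving cell. The offset, sample count and signal values are hypothetical, and nothing here describes the C1X's actual logic.

```python
def handover_index(serving_dbm, neighbour_dbm, offset_db=3.0, time_to_trigger=3):
    """Return the measurement index at which a handover fires, or None.

    Fires only after the neighbour beats the serving cell by more than offset_db
    for time_to_trigger consecutive samples, so brief fluctuations don't cause
    ping-pong switching. All thresholds here are hypothetical.
    """
    streak = 0
    for i, (serving, neighbour) in enumerate(zip(serving_dbm, neighbour_dbm)):
        streak = streak + 1 if neighbour > serving + offset_db else 0
        if streak >= time_to_trigger:
            return i
    return None


# Serving signal fades while a neighbouring cell/band gets relatively stronger.
serving = [-80, -82, -85, -86, -88, -90]
neighbour = [-84, -80, -81, -82, -83, -84]
print(handover_index(serving, neighbour))  # 4

# A neighbour that only flickers above the margin never triggers a switch.
print(handover_index([-80] * 6, [-76, -78, -76, -78, -76, -78]))  # None
```

Raising `offset_db` or `time_to_trigger` makes switching more stable but slower. Lowering them makes it faster but twitchier, and any modem's tuning has to strike that balance.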

Where does Apple stand?

The direction is obvious: AI has stopped being a wow feature. It’s becoming infrastructure. It’s something you don’t think about because it’s everywhere: inside your camera, your keyboard, your notifications, your browser, your messages, your maps and now, thanks to chips like the A19 Pro, inside the silicon itself.

Here’s the landscape Apple is entering: not brute-force training GPUs, not cloud-assisted mobile chips, but something in between.

A19 Pro vs H100 vs X Elite vs Tensor G4

| Chip | Category | AI Strength | Weakness | Ideal Use |
|---|---|---|---|---|
| NVIDIA H100 | Data-centre GPU | Tensor Cores, massive parallel matrix throughput | Power-hungry, data-centre only | Training and serving large models |
| Apple A19 Pro | Mobile | GPU-integrated neural accelerators, instant on-device AI | Not built for large models | Privacy-focused personal AI, offline apps |
| Snapdragon X Elite | Laptop | Strong NPU, high CPU/GPU performance | Relies on the cloud for big models | Windows AI PCs |
| Google Tensor G4 | Mobile | Great for Google Photos, speech and ML features | Weak thermal performance | Cloud-assisted AI (Gemini) |

This is where Apple is positioning itself. It isn’t in the hype-driven "let’s build the biggest model" race, but in the everyday, always-on, invisible intelligence that runs directly on your device. While the world plays with futuristic demos in the cloud, Apple is turning the iPhone into a machine that uses AI quietly: minimal latency, no data leaving the phone, no waiting on a server and no internet requirement.

For years Apple has been accused of being behind in AI.

With the A19 Pro, they didn't catch up.

They changed the race. This is the edge era.

